Miroslav Smatana (TUKE)

Machine learning gives computers the ability to learn without being explicitly programmed.

Learning is improving performance in a certain environment by gaining knowledge from experience in that environment.

A computer program is able to learn from experience (training examples) if its performance on a class of tasks improves thanks to the given experience.

History of terms

Data mining

* Estimation of the orbits of comets and planets around the Sun

Big data

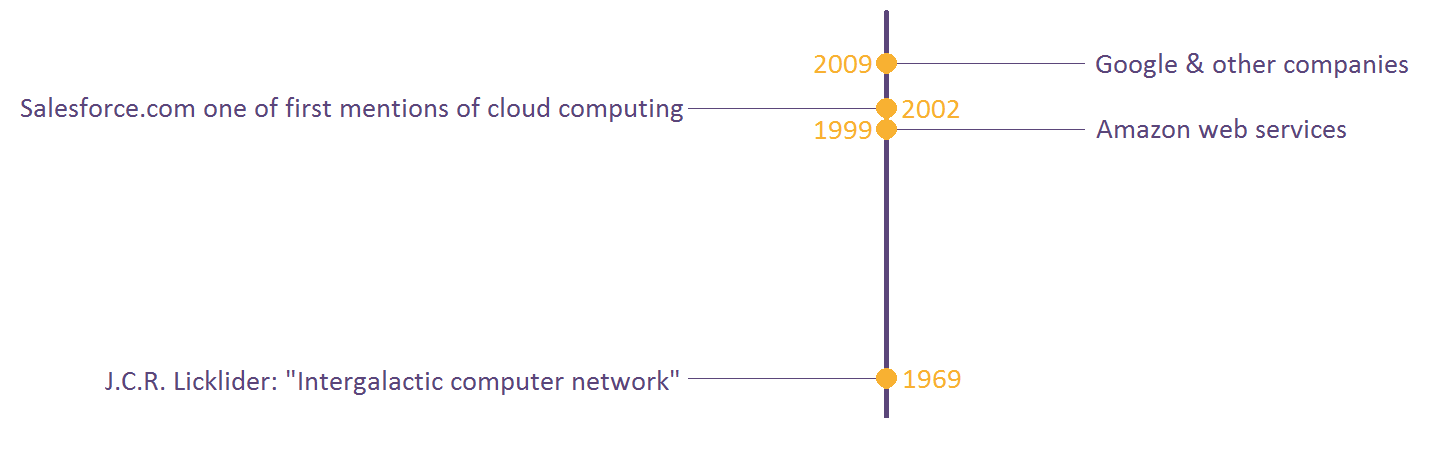

Cloud computing

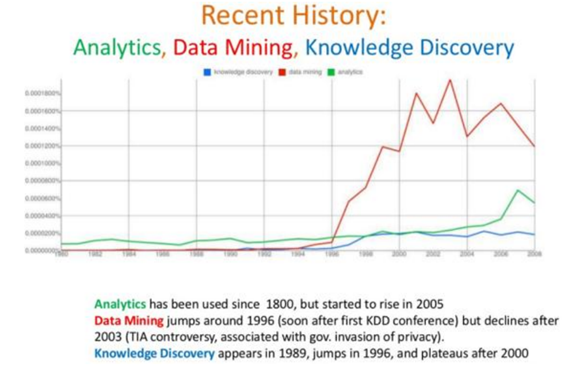

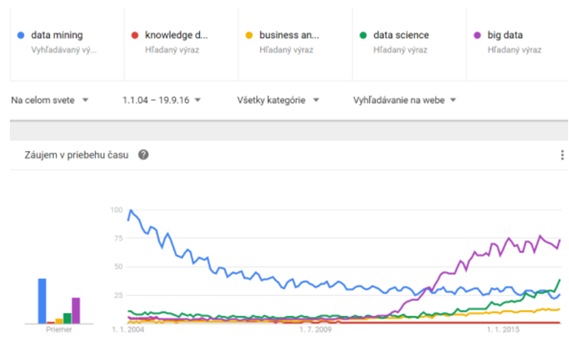

Historical use of relevant terms

How we use the words:

Explanation of terms

Cloud

- Service on demand - modular solutions based on open platforms

- Internet access - a thin client is sufficient to access the cloud, emphasis on security and compliance with standards

- Payment for utilised resources - optimisation of operational cost

- Scalability and elasticity - resources, capacities and services can be added and removed flexibly

- Pooling and sharing of resources - resilience of the solution against outages

Big data

Definition: Big data are data that cannot be processed by common means in the required time because of their volume, velocity of updates and/or variety.

Characteristics of the 3V/5V models:

- Volume - size of accumulated and processed data in GB/TB/PB

- Velocity - the speed at which data are generated and how fast they must be processed (data are updated fast; the updates themselves can be small in volume)

- Variety - data of various types must be processed (structured data from databases, texts, multimedia, sensor data etc.); the type of data can also change

- [Veracity] - data may be inconsistent or faulty, or the source may be untrustworthy

- [Value] - data are accumulated and processed to gain new knowledge that can be applied effectively; the accumulated data must be potentially useful

Knowledge discovery

Knowledge discovery in databases is a process of semi-automatic extraction of knowledge from databases. Knowledge must be:

- Valid (in statistical meaning)

- Yet unknown

- Potentially useful (for the given application)

Knowledge discovery is an iterative and interactive process. It draws mainly on the following fields:

- Statistics

- Machine learning

- Database systems

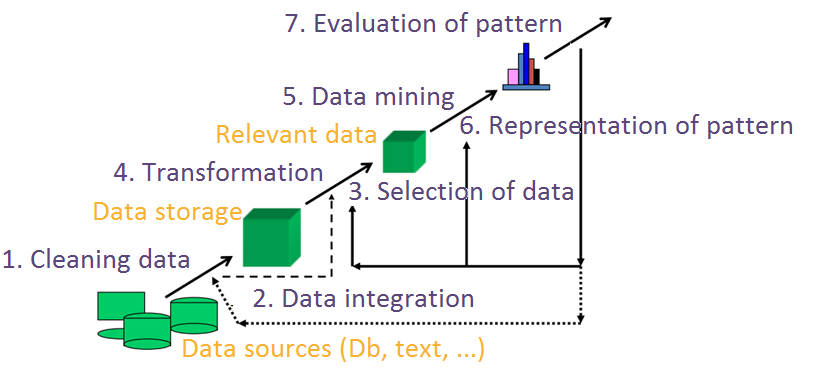

Process

- Understanding the application and current knowledge (existing relevant knowledge and the aim of the knowledge discovery process)

- Cleaning data (removing inconsistent data)

- Data integration from several sources (often heterogeneous)

- Selection of data relevant for the given aim (attribute analysis)

- Data transformation into a representation suitable for the given aim of the knowledge discovery process (e.g. discretisation)

- Data mining - application of intelligent methods to extract valid patterns (the most important data mining tasks are description, association rules, classification/prediction and clustering)

- Evaluation of the patterns found - application of the chosen evaluation measures

- Presentation of patterns - methods of knowledge representation and its visualisation (explicit knowledge)

- Use of the discovered knowledge in the given application
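The steps above can be sketched end to end on a toy example. This is only an illustrative sketch: the records, the field names (`age`, `segment`, `bought`), the age bins and the "majority class per group" rule learner are all assumptions made up for this example, not part of the lecture material.

```python
from collections import Counter, defaultdict

# Hypothetical source 1: customer records (one of them is inconsistent).
crm = [
    {"id": 1, "age": 23, "segment": "web"},
    {"id": 2, "age": 47, "segment": "shop"},
    {"id": 3, "age": -5, "segment": "web"},   # impossible age
    {"id": 4, "age": 27, "segment": "shop"},
    {"id": 5, "age": 61, "segment": "web"},
    {"id": 6, "age": 35, "segment": "shop"},
    {"id": 7, "age": 25, "segment": "web"},
]
# Hypothetical source 2: customer id -> did the customer buy?
purchases = {1: "yes", 2: "no", 3: "yes", 4: "yes", 5: "no", 6: "no", 7: "no"}


def clean(records):
    """Cleaning: remove records with an inconsistent age."""
    return [r for r in records if 0 <= r["age"] <= 120]


def integrate(records, bought):
    """Integration: join both sources on the customer id."""
    return [dict(r, bought=bought[r["id"]]) for r in records if r["id"] in bought]


def select(records):
    """Selection: keep only the attributes relevant for the aim."""
    return [{"age": r["age"], "bought": r["bought"]} for r in records]


def discretise(age):
    """Transformation: map a numeric age to an (assumed) age group."""
    if age < 30:
        return "young"
    if age < 50:
        return "middle"
    return "senior"


def transform(records):
    return [(discretise(r["age"]), r["bought"]) for r in records]


def mine(rows):
    """Data mining: learn one rule per age group (its majority class)."""
    counts = defaultdict(Counter)
    for group, label in rows:
        counts[group][label] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


def evaluate(rules, rows):
    """Evaluation: share of rows that the rules classify correctly."""
    hits = sum(1 for group, label in rows if rules.get(group) == label)
    return hits / len(rows)


rows = transform(select(integrate(clean(crm), purchases)))
rules = mine(rows)
accuracy = evaluate(rules, rows)

# Presentation: show the discovered rules as explicit knowledge.
for group, label in sorted(rules.items()):
    print(f"IF age group = {group} THEN bought = {label}")
print(f"accuracy on the training data: {accuracy:.2f}")
```

Note that the accuracy here is measured on the same data the rules were learned from; in practice the evaluation step would normally use data held out from mining.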

Machine learning

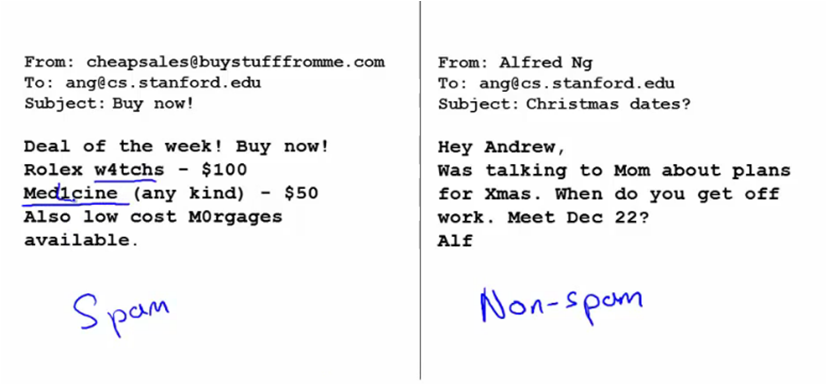

We use machine learning every day:

- Search engines (we tell Google which links are relevant)

- Spam filter (mark spam and leave the computer to work out why)

- Picture tagging by Apple

- ...
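The spam filter item can be made concrete with a small sketch: the user only marks messages as spam or not, and the program works out which words matter. The example below uses a tiny naive Bayes classifier with Laplace smoothing; the technique, the function names `train` and `predict`, and the four training messages are assumptions chosen for illustration, not a description of any real mail filter.

```python
import math
from collections import Counter


def train(messages):
    """Count words per label from (text, label) pairs; label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def predict(model, text):
    """Return the label with the higher log-probability for the text."""
    word_counts, label_counts = model
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        # Prior: how common the label is among the marked messages.
        score = math.log(label_counts[label] / total_messages)
        for word in text.lower().split():
            # Laplace smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical messages marked by the user (both labels must be present).
marked = [
    ("win money now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting at noon", "ham"),
    ("lunch at noon tomorrow", "ham"),
]
model = train(marked)
first = predict(model, "win cheap money")
second = predict(model, "meeting tomorrow at noon")
print(first, second)
```

No rule such as "messages containing *money* are spam" is written by hand; it follows from the word counts in the marked examples.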

We cannot hand-code everything (explicitly programmed algorithms do not work):

- Autonomous helicopter

- Written text recognition

- Natural language processing

- ...

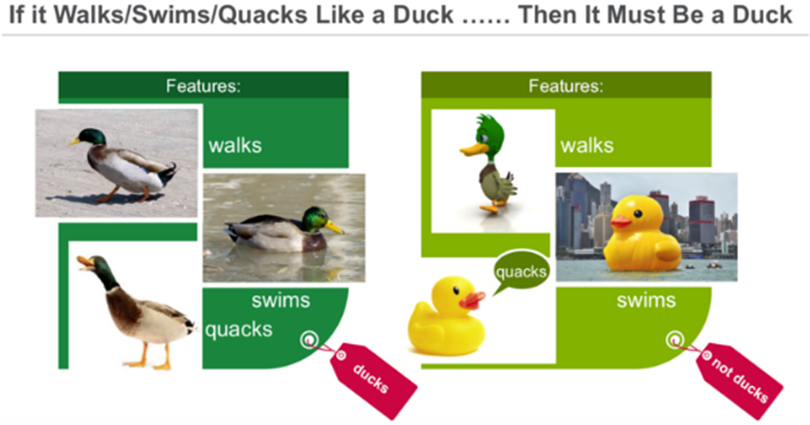

Categories of machine learning:

Example of supervised predictive learning (usually there are more dimensions and the trend lines are not this simple):

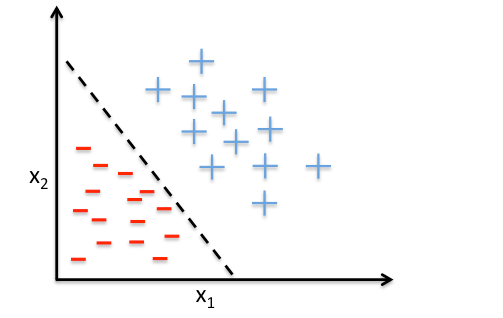
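A one-dimensional trend line of this kind can be fitted with ordinary least squares. The following is a minimal sketch under the assumption that the relationship is roughly linear; the function name `fit_line` and the data points are invented for illustration and are not taken from the figure.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training examples: inputs with known target values.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(xs, ys)

# Prediction for an input that was not among the training examples.
prediction = slope * 6.0 + intercept
print(f"y = {slope:.2f} * x + {intercept:.2f}; prediction for x = 6: {prediction:.2f}")
```

The learning is supervised because every training input comes with its target value, and predictive because the fitted line is then applied to unseen inputs.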

Example of a linear decision boundary for binary classification:
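One simple way to learn a linear decision boundary is the perceptron algorithm. The sketch below is an assumption for illustration (the notes do not say which method produced the boundary); the points, labels and function names are hypothetical, and the data are chosen to be linearly separable so that the training loop terminates.

```python
def train_perceptron(points, labels, epochs=100, lr=1.0):
    """Learn weights w and bias b so that sign(w . x + b) matches the labels."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), y in zip(points, labels):
            activation = w[0] * x1 + w[1] * x2 + b
            predicted = 1 if activation > 0 else -1
            if predicted != y:
                # Move the boundary towards the misclassified point.
                w[0] += lr * y * x1
                w[1] += lr * y * x2
                b += lr * y
                errors += 1
        if errors == 0:  # every training point is on the correct side
            break
    return w, b


def classify(w, b, point):
    """Return +1 or -1 depending on the side of the boundary."""
    return 1 if w[0] * point[0] + w[1] * point[1] + b > 0 else -1


# Hypothetical, linearly separable training data with labels -1 and +1.
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_perceptron(points, labels)
print(f"decision boundary: {w[0]} * x1 + {w[1]} * x2 + {b} = 0")
print(classify(w, b, (0, 0)), classify(w, b, (6, 6)))
```

The learned boundary is the line where `w[0] * x1 + w[1] * x2 + b` equals zero; points on one side are assigned to one class and points on the other side to the second class.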

Example of unsupervised learning: clustering / identification of segments:
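Clustering can be illustrated with k-means, one common algorithm for this task. The sketch below is an assumption for illustration only: the points, the choice of two clusters and the fixed initial centroids are made up, and real implementations usually pick the initial centroids randomly. It needs Python 3.8 or newer for `math.dist`.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and moving centroids."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [math.dist(p, c) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                mean = tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
                new_centroids.append(mean)
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:  # nothing moved: converged
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical unlabelled data: two visually separate groups.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9.5), (8.5, 9)]
initial = [points[0], points[3]]  # assumed fixed start for reproducibility

centroids, clusters = kmeans(points, initial)
for centre, members in zip(centroids, clusters):
    print(centre, members)
```

No labels are given to the algorithm; the two segments emerge only from the distances between the points.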

Case studies

Application areas:

- Marketing (segmentation of customers, optimisation of marketing campaigns)

- Fraud detection (credit card transactions, mobile telecommunication networks)

- Targeted advertising (based on observation of personal behaviour on the web, recommendation systems)

- Scientific applications (medicine)

- ...

Affinio

Segments people into communities based on their preferences:

- Understanding customers based on their behaviour

- Targeted marketing

- Identification of the most suitable distribution channel for reaching customers

Prelert

- Behaviour analysis for security of payments

- Detection of possible insider trading on the stock market

Barilliance

- Personalised recommendation of products

- Sending emails with relevant content

- Recommendations directly on the web

Read more

https://rayli.net/blog/data/history-of-data-mining/

http://www.forbes.com/sites/gilpress/2013/05/09/a-very-short-history-of-big-data/#548f0fed55da

http://www.computerweekly.com/feature/A-history-of-cloud-computing